In modern Industry 4.0 environments, the demand for seamless data exchange between Operational Technology (OT) and Information Technology (IT) is increasing. In this context, IT/OT interoperability in the Unified Namespace (UNS) refers to the ability to connect machine data and business systems through a common data platform. A UNS functions as a centralized, hierarchical data model: a single source of truth for all IIoT data across the enterprise. This enables authorized systems to access and process sensor readings, production metrics, and business parameters in real time. In this article, we outline the fundamentals of IT/OT interoperability, from the technical layers of data communication to best practices in a UNS architecture.

What is IT/OT Interoperability?

IT/OT interoperability is the capability to exchange data between OT systems (e.g., machines, PLCs, SCADA) and IT systems (e.g., MES, ERP, or cloud platforms) in a structured, consistent, and lossless way. The critical factor is not just the network connection, but the semantic and syntactic consistency of data across both domains. Within the context of Industry 4.0 and IIoT, this plug-and-play communication is essential to establish uninterrupted data flows — from sensors all the way to analytics, AI, or business applications. Without common standards, organizations are forced into fragmented point-to-point integrations, which are difficult to scale, costly to maintain, and prone to vendor lock-in.

The Data Access Model – Levels of Interoperability

To make the challenges of interoperability more concrete, it is useful to look at Matthew Parris’ Data Access Model (source). This framework breaks down data integration into six levels (0-5), ranging from basic connectivity to semantic object modeling. Each level represents a critical dimension of data access. True plug-and-play interoperability is only achievable if all levels are consistently aligned across systems.

Level 0 – Transport

The transport layer provides the basic network connection between endpoints. At this stage, no semantics or formats are involved; it is purely about reliably moving bits and bytes. Typical technologies at level 0 include:

- General transports: TCP/IP (connection-oriented, ordered delivery), UDP (connectionless and efficient, but without delivery guarantees; common in time-critical OT use cases).

- Industrial transports: RS-485, fiber optics, and other physical layers forming the basis of fieldbuses like Profibus.

Example: A PLC sends process values to the company network via TCP/IP. The IT side initially only sees IP data packets – without knowing whether they contain temperature or pressure values.
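
To make this concrete, the following Python sketch sends one process value over a local stream socket pair that stands in for the PLC-to-network TCP connection. The four-byte big-endian float layout is an assumption of this example, not a property of any particular PLC. The receiver gets the bytes intact, yet the same four bytes read equally well as a float or as an unsigned integer.

```python
import socket
import struct


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a stream socket delivers bytes, not messages."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("connection closed before n bytes arrived")
        data += chunk
    return data


# A local stream socket pair stands in for the PLC-to-IT TCP connection.
plc_side, it_side = socket.socketpair()
# Hypothetical device layout: one big-endian 32-bit float.
plc_side.sendall(struct.pack(">f", 25.5))
raw = recv_exact(it_side, 4)
plc_side.close()
it_side.close()

# Level 0 delivered the bytes intact, but nothing says how to interpret them.
as_float = struct.unpack(">f", raw)[0]  # if the sender meant a float
as_uint = struct.unpack(">I", raw)[0]   # if the sender meant an unsigned integer
print(raw.hex(), as_float, as_uint)     # 41cc0000 25.5 1103888384
```

Both interpretations are valid decodings of the same bytes; choosing between them requires agreements on the higher levels.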

Level 1 – Protocol

At this layer, the communication logic is defined. Protocols build on the transport to establish how messages are exchanged. Typical protocols include:

- IT standards: HTTP/REST (runs over TCP/IP and defines methods such as GET, POST, and PUT for data exchange).

- Open industrial protocols: PROFINET (real-time over Ethernet), EtherNet/IP (CIP-based over TCP/UDP), OPC UA (standardized services and address spaces), MQTT (lightweight publish/subscribe).

- Vendor-specific protocols: Beckhoff ADS (TwinCAT), Siemens S7 via RFC1006 (ISO-on-TCP).

Example: A machine publishes temperature values via MQTT. While level 0 ensures that the packets arrive, the MQTT protocol ensures distribution using topics in a publish/subscribe model.
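
The publish/subscribe distribution can be illustrated with a tiny in-memory broker. This is not a real MQTT client or broker: `TinyBroker` and `topic_matches` are hypothetical names, and the matcher implements only the two MQTT wildcards (`+` for exactly one topic level, `#` for all remaining levels) while ignoring details such as `$`-prefixed system topics, QoS, and retained messages.

```python
from typing import Callable

Handler = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Simplified MQTT topic-filter check ('$' topics are not special-cased)."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' is only valid as the last level and also matches the parent level.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


class TinyBroker:
    """In-memory stand-in for a broker: no network, QoS, or retained messages."""

    def __init__(self) -> None:
        self._subscriptions: list[tuple[str, Handler]] = []

    def subscribe(self, topic_filter: str, handler: Handler) -> None:
        self._subscriptions.append((topic_filter, handler))

    def publish(self, topic: str, payload: bytes) -> None:
        # The broker, not the publisher, decides who receives the message.
        for topic_filter, handler in self._subscriptions:
            if topic_matches(topic_filter, topic):
                handler(topic, payload)


received: list[tuple[str, bytes]] = []
broker = TinyBroker()
broker.subscribe("factory/+/+/temp", lambda t, p: received.append((t, p)))
broker.publish("factory/line1/machine3/temp", b"25.3")
broker.publish("factory/line1/machine3/pressure", b"1.2")
print(received)  # [('factory/line1/machine3/temp', b'25.3')]
```

The publisher never addresses a receiver directly; routing is determined entirely by the topic and the subscribers' filters.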

Level 2 – Mapping

This level determines how the data is addressed and structured within a protocol. While the protocol (level 1) specifies the communication mechanism, the mapping determines which topics, paths, or addresses are used to uniquely identify data. This defines where a certain value can be found in the data stream. Examples:

- IT mappings: In RESTful APIs, unique URL paths are used as mappings (e.g. GET /api/machines/123/telemetry) to uniquely address resources and their data points.

- Open industrial protocols: PROFINET uses index/slot mechanisms (GSDML models), EtherNet/IP uses CIP assembly instances, OPC UA defines NodeIds as addressing, MQTT relies on hierarchical topic names (e.g. factory/line1/machine3/temp), but does not specify the structure of the payload.

- Vendor-specific mappings: Beckhoff ADS addresses variables via IndexGroup/IndexOffset or symbolic names; Siemens S7 uses PLC data block addresses (DB number, byte/bit offset) to uniquely identify variables.

Example: A PLC publishes the value 25.3 °C. With plain MQTT, this value could end up in the topic factory/line1/machine3/temp, with a payload such as the string “25.3”. Without prior agreement, recipients can read the topic but do not necessarily know whether “25.3” is a temperature in °C, °F, or something else entirely.
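
The gap can be shown in a few lines. In this sketch, the `MqttMessage` type and the `interpret` function are hypothetical, and the topic is assumed to have exactly the four levels from the example. Two senders mean different units but emit byte-identical messages, so a consumer relying only on topic and payload cannot tell them apart.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MqttMessage:
    topic: str
    payload: bytes


def interpret(msg: MqttMessage) -> dict:
    """What a consumer can derive from the mapping alone, without extra agreements."""
    # Assumes the four-level topic from the example: plant/line/machine/signal.
    plant, line, machine, signal = msg.topic.split("/")
    return {
        "plant": plant,
        "line": line,
        "machine": machine,
        "signal": signal,
        "value": float(msg.payload.decode("utf-8")),
        "unit": None,  # neither topic nor payload states °C or °F
    }


# Two hypothetical senders: one means °C, the other means °F.
from_celsius_plc = MqttMessage("factory/line1/machine3/temp", b"25.3")
from_fahrenheit_plc = MqttMessage("factory/line1/machine3/temp", b"25.3")
print(interpret(from_celsius_plc) == interpret(from_fahrenheit_plc))  # True
```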

Level 3 – Encoding

Encoding defines how mapped data is serialized into bytes for transport. This choice directly impacts bandwidth, latency, CPU load, and error handling. Common encodings are:

- General formats: JSON (human-readable, flexible), XML (highly structured via XML schema, but verbose and memory-intensive).

- Industry formats: OPC UA Binary (efficient, binary); MQTT payloads are often JSON or compact Protocol Buffers (Protobuf). Protobuf is schema-based, binary, and very performant, but requires careful schema management.

- Vendor formats: Beckhoff’s ADS telegram (fixed header incl. IndexGroup/Offset), Siemens S7 data telegrams (function codes, DB addresses, etc.).
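
To give a feel for these trade-offs, the sketch below serializes one reading as JSON, as XML, and as a fixed binary layout. The binary layout (a 16-bit machine ID followed by a 32-bit float) is a hypothetical schema standing in for formats such as Protobuf or vendor telegrams; actual sizes depend on field names and layout, so treat the numbers as illustrative.

```python
import json
import struct
import xml.etree.ElementTree as ET

reading = {"machine": "machine3", "temperature": 25.3}

# JSON: human-readable, self-describing field names (compact separators).
as_json = json.dumps(reading, separators=(",", ":")).encode("utf-8")

# XML: same content as nested elements, more verbose.
root = ET.Element("reading")
ET.SubElement(root, "machine").text = reading["machine"]
ET.SubElement(root, "temperature").text = str(reading["temperature"])
as_xml = ET.tostring(root, encoding="unicode").encode("utf-8")

# Fixed binary layout (hypothetical schema): uint16 machine ID + float32,
# little-endian, no padding. Both sides must know that ID 3 means "machine3".
as_binary = struct.pack("<Hf", 3, reading["temperature"])

print(len(as_json), len(as_xml), len(as_binary))  # 41 77 6

# float32 cannot hold 25.3 exactly, so the schema also fixes the precision.
machine_id, temperature = struct.unpack("<Hf", as_binary)
print(machine_id, round(temperature, 3))  # 3 25.3
```

The binary form is an order of magnitude smaller, but it is unreadable without the schema, which is exactly the management burden noted above for Protobuf.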

Example: An OPC UA client sends a read request for the NodeId ns=2;s=temperature. The request is encoded in OPC UA Binary. The server’s response contains the temperature value as a 32-bit float, e.g. the IEEE 754 bit pattern 0x41800000, which represents 16.0 and is transmitted in little-endian byte order as 00 00 80 41.
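
Byte order is one of the details an encoding must pin down. The following sketch packs 16.0 as an IEEE 754 single-precision float in both byte orders and shows that decoding with the wrong order silently yields a different number. It uses only Python's standard `struct` module and is not an OPC UA stack.

```python
import struct

value = 16.0
big_endian = struct.pack(">f", value)     # bit pattern as usually written: 0x41800000
little_endian = struct.pack("<f", value)  # byte order OPC UA Binary uses on the wire

print(big_endian.hex(" "))     # 41 80 00 00
print(little_endian.hex(" "))  # 00 00 80 41

# A decoder that assumes the correct byte order recovers the value ...
print(struct.unpack("<f", little_endian)[0])  # 16.0

# ... while the wrong byte order yields a tiny, meaningless number without any error.
wrong = struct.unpack(">f", little_endian)[0]
print(wrong != value)  # True
```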

Level 4 – Values

This level defines the actual data types and ranges that may occur in a message. The value can only be interpreted correctly if the sender and receiver understand the same data type. While level 3 specifies how values are serialized, level 4 determines which values are transmitted. Typical data type definitions:

- Primitive types: Boolean, Integer, Float/Double, String.

- Complex types: Arrays, structures/objects with nested fields.

- Standardized types: OPC UA built-ins (Boolean, Int16, Float, String, DateTime, GUID), PROFINET and EtherNet/IP process data types.

- PLC environments: IEC 61131-3 types (BOOL, INT, REAL, STRING), plus vendor-specific extensions (e.g. Beckhoff, Siemens).

Example: A machine reports the status “Motor running” as a Boolean to a control system. Semantics: true if the motor is switched on and false if it is stopped. Both sides must agree that this value is a Boolean and what true/false mean in each case.
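
Such an agreement can also be checked in code. The sketch below covers a subset of IEC 61131-3 elementary types; the `VALIDATORS` table and the `conforms` function are hypothetical and not taken from any PLC vendor's library. Note that a sender transmitting the integer 1 for “Motor running” violates the agreed BOOL type even though many systems would treat it as true.

```python
import struct
from typing import Any, Callable


def _is_int_in_range(value: Any, low: int, high: int) -> bool:
    # bool is a subclass of int in Python, so it is excluded explicitly.
    return isinstance(value, int) and not isinstance(value, bool) and low <= value <= high


def _fits_real(value: Any) -> bool:
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    try:
        struct.pack("<f", value)  # raises OverflowError outside the float32 range
    except OverflowError:
        return False
    return True


# Subset of IEC 61131-3 elementary types and their value ranges.
VALIDATORS: dict[str, Callable[[Any], bool]] = {
    "BOOL": lambda v: isinstance(v, bool),
    "INT": lambda v: _is_int_in_range(v, -32768, 32767),        # 16-bit signed
    "DINT": lambda v: _is_int_in_range(v, -2**31, 2**31 - 1),   # 32-bit signed
    "REAL": _fits_real,                                         # 32-bit float
    "STRING": lambda v: isinstance(v, str),
}


def conforms(iec_type: str, value: Any) -> bool:
    return VALIDATORS[iec_type](value)


# "Motor running" is agreed to be BOOL: true = switched on, false = stopped.
print(conforms("BOOL", True))  # True
print(conforms("BOOL", 1))     # False: integer 1 is not the agreed type
print(conforms("INT", 40000))  # False: outside the 16-bit range
```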

Level 5 – Objects

The top level describes the organization of data in objects and models. While the mapping (level 2) only defines where a single value is located, the object level is about how several values logically belong together and what attributes, properties, or methods they have. In this way, isolated variables are combined into structured information models that can represent complex machines or processes in full. Examples of object-oriented data models:

- OPC UA Companion Specifications: Domain-specific models (robots, CNC machines, Weihenstephan standard).

- Asset Administration Shell (AAS): Describes industrial assets as a digital administration shell with standardized submodels.

- VDA 5050: Standardized communication interface for AGVs/AMRs, defining a shared object model for navigation, orders, and status independent of vendor.

Example: The OPC UA Companion Specification for Robotics defines a standardized information model for industrial robots. It describes a robot arm as an object with attributes such as JointPosition, ToolCenterPoint or OperationMode. Regardless of whether the robot comes from manufacturer A or B – both systems provide the same object type with the same properties.
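
The following sketch illustrates the idea behind a shared object type. `RobotArm` is a deliberately simplified stand-in whose fields mirror the attributes named above; it is not the information model defined by the companion specification, and the vendor tag names and mode codes are invented for illustration. Both vendors' flat tags are normalized into the same object, so consumers no longer depend on the manufacturer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotArm:
    """Simplified common object type; fields mirror the attributes named in the text."""
    joint_position: tuple[float, ...]              # JointPosition, degrees per axis
    tool_center_point: tuple[float, float, float]  # ToolCenterPoint x, y, z in mm
    operation_mode: str                            # OperationMode


# Hypothetical raw tags from two vendors' controllers (three axes for brevity).
vendor_a_tags = {"J1": 10.0, "J2": -45.0, "J3": 90.0,
                 "TCP_X": 500.0, "TCP_Y": 0.0, "TCP_Z": 750.0, "Mode": "AUTO"}
vendor_b_tags = {"axes": [10.0, -45.0, 90.0],
                 "tcp": {"x": 500.0, "y": 0.0, "z": 750.0}, "opMode": 2}


def from_vendor_a(tags: dict) -> RobotArm:
    joints = tuple(tags[f"J{i}"] for i in range(1, 4))
    tcp = (tags["TCP_X"], tags["TCP_Y"], tags["TCP_Z"])
    mode = {"AUTO": "AUTOMATIC", "MAN": "MANUAL"}[tags["Mode"]]
    return RobotArm(joints, tcp, mode)


def from_vendor_b(tags: dict) -> RobotArm:
    tcp = tags["tcp"]
    mode = {1: "MANUAL", 2: "AUTOMATIC"}[tags["opMode"]]
    return RobotArm(tuple(tags["axes"]), (tcp["x"], tcp["y"], tcp["z"]), mode)


# Consumers see one object type regardless of the manufacturer.
print(from_vendor_a(vendor_a_tags) == from_vendor_b(vendor_b_tags))  # True
```

In a standardized setup, the mapping functions would ideally disappear because each device exposes the common object type directly.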

IT/OT Interoperability in the Unified Namespace (UNS)

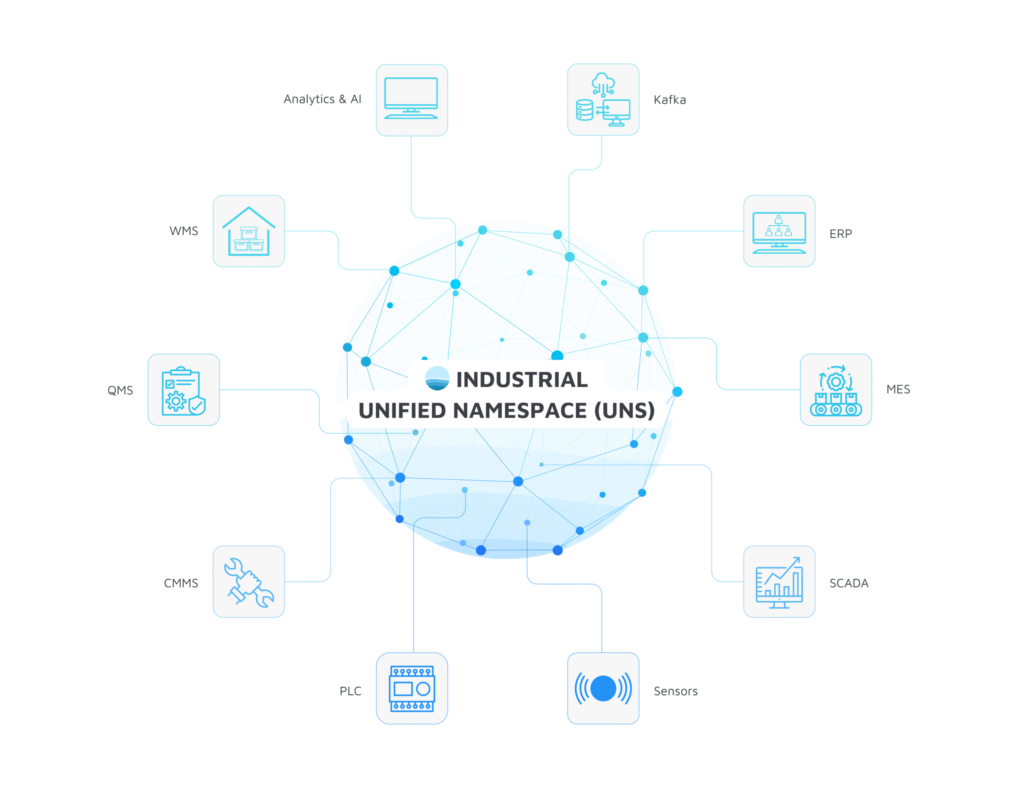

Having explored the levels of interoperability, the key question is how to unify them in practice. This is where the Unified Namespace (UNS) comes into play: a central data architecture that connects IT and OT systems through a common hub. Unlike the traditional ISA-95 pyramid model, which enforces data exchange only between adjacent layers, the UNS aggregates all information into a single, centralized hub. In most implementations, this hub is a publish/subscribe broker (typically MQTT) through which devices and applications publish and subscribe to data streams.

A UNS fully decouples producers and consumers: systems no longer integrate point-to-point but simply connect to the broker. This simplifies the onboarding of new data sources and the replacement of consumers — for example, when migrating MES or dashboard solutions. At the same time, the UNS provides a company-wide single source of truth for IT and OT, ensuring consistency across all layers of the enterprise. An introduction to the concept in the context of Industry 4.0 can be found here.
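
The decoupling can be sketched with an in-memory stand-in for the broker. The `Hub` class, the topic, and the dashboards are hypothetical; a real UNS would use an MQTT broker with networked clients. The point to note is that migrating from the old to the new dashboard does not touch the producer function at all.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, float], None]
TOPIC = "enterprise/site1/line1/machine3/temp"  # hypothetical UNS topic


class Hub:
    """In-memory stand-in for the UNS broker (exact topic match, no wildcards)."""

    def __init__(self) -> None:
        self._handlers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def unsubscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].remove(handler)

    def publish(self, topic: str, value: float) -> None:
        for handler in list(self._handlers[topic]):
            handler(topic, value)


def machine(hub: Hub, value: float) -> None:
    # The producer knows only the hub and its own topic, never its consumers.
    hub.publish(TOPIC, value)


old_dashboard: list[float] = []
new_dashboard: list[float] = []


def old_handler(topic: str, value: float) -> None:
    old_dashboard.append(value)


def new_handler(topic: str, value: float) -> None:
    new_dashboard.append(value)


hub = Hub()
hub.subscribe(TOPIC, old_handler)
machine(hub, 25.3)

# Migration: replace the dashboard; the producer code stays untouched.
hub.unsubscribe(TOPIC, old_handler)
hub.subscribe(TOPIC, new_handler)
machine(hub, 25.4)

print(old_dashboard, new_dashboard)  # [25.3] [25.4]
```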

Challenges in Implementation

It is crucial for IT/OT architects to understand that no single protocol covers all levels of interoperability (see the comparison of OPC UA and MQTT here). While MQTT is valued for scalability and simplicity, it only covers transport (Level 0, typically TCP/IP) and protocol (Level 1) in the Data Access Model. All higher layers (mapping, encoding, data types, and object models) remain undefined and must be specified explicitly. Simply declaring “MQTT” as the UNS standard will not deliver interoperability. In practice, this leads to common problems:

- Non-uniform topics (Level 2): Without rules, each system defines its own topic structures. Consumers must be adapted individually.

- Undefined payload (Level 3): Devices choose their own data format (JSON, XML, or binary), forcing consuming systems to parse or transform payloads case by case.

- Inconsistent data types and semantics (Level 4): Sensors encode the same variable differently, e.g. temperature as a float in °C, as a scaled integer, or as a string. Consumers risk misinterpretation and processing errors.

- No common object model (Level 5): Machines expose data as flat tags or nested object trees. Consumers must build custom logic for each device to normalize the data into a usable model.
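
To illustrate how these gaps add up, the sketch below has three hypothetical devices report the same 25.3 °C in three different ways: JSON in °C, a scaled 16-bit integer, and text in °F. Topics, payload layouts, and adapter functions are invented for this example; the point is that every consumer must carry one adapter per device.

```python
import json
import struct
from typing import Callable

# Three hypothetical devices reporting the same 25.3 °C in different ways.
messages = [
    ("plant/line1/m1/temp", b'{"temp": 25.3}'),    # JSON float in °C
    ("Plant/Line1/M2/Temperature", b"\x00\xfd"),  # big-endian uint16, tenths of °C (253)
    ("PLANT/L1/M3/TEMP_F", b"77.54"),             # ASCII text in °F
]


def from_m1(payload: bytes) -> float:
    return json.loads(payload)["temp"]


def from_m2(payload: bytes) -> float:
    (tenths,) = struct.unpack(">H", payload)
    return tenths / 10


def from_m3(payload: bytes) -> float:
    fahrenheit = float(payload.decode("ascii"))
    return (fahrenheit - 32) * 5 / 9


# Without shared rules, every device needs its own adapter in every consumer.
ADAPTERS: dict[str, Callable[[bytes], float]] = {
    "plant/line1/m1/temp": from_m1,
    "Plant/Line1/M2/Temperature": from_m2,
    "PLANT/L1/M3/TEMP_F": from_m3,
}

normalized = [round(ADAPTERS[topic](payload), 2) for topic, payload in messages]
print(normalized)  # [25.3, 25.3, 25.3]
```

Each new device adds another entry to this table, and each consumer must maintain its own copy, which is why such integrations scale poorly.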

Without binding specifications, organizations typically end up with proprietary integrations. These may solve immediate problems but fail to scale — resulting in higher long-term integration costs, maintenance overhead, and lock-in risks.

Strategies in Practice and Their Limitations

Because no single industry standard consistently spans all interoperability levels, organizations have adopted different strategies. Each approach offers benefits but also comes with significant trade-offs:

- Use case-specific strategy: Ad-hoc specifications are defined for individual projects or pilots.

  - Advantage: Fast implementation, low initial hurdle.

  - Disadvantage: No reusability; over time, this leads to a fragmented patchwork of isolated solutions.

- Company-specific strategy: Internal conventions are defined for topics, payload formats, and data types.

  - Advantage: Uniform communication within the company.

  - Disadvantage: Does not create interoperability with external partners, and requires effort for enterprise-wide requirements analysis and governance.

- Open standards: Initiatives such as Sparkplug B or OPC UA Companion Specifications provide standardized models for certain layers.

  - Advantage: Enables cross-vendor interoperability, provided all participants implement the standard consistently.

  - Disadvantage: Adoption is often limited, and applicability must be evaluated carefully. Example: Sparkplug B is best suited for SCADA environments.

Best Practices

For a UNS to deliver true interoperability, companies need a binding rule set that applies to all publishers and subscribers. This rule set should define each interoperability level according to the Data Access Model. Two guiding principles are essential:

- Align with open standards: Use established models such as ISA-95 or OPC UA Companion Specifications as a reference and adapt them to your own requirements – for example by using consistent naming rules for UNS objects.

- Minimize options: Restrict variability at each level to what is strictly necessary. Example: define JSON as the default format, with Protobuf co-existing in the namespace only where bandwidth or performance requirements justify it.

Recommendations per level:

- Level 2 – Topic structure (mapping): Define a consistent, hierarchical naming convention, e.g. based on ISA-95 (location / area / line / machine / signal). This ensures clarity in the namespace and prevents uncontrolled growth. Detailed best practices can be found here.

- Level 3 – Encoding: Specify a standard payload format, typically JSON. Allow a binary format such as Protocol Buffers in parallel only for bandwidth-sensitive scenarios. You can find more information here.

- Level 4 – Data types: Harmonize the data types for measured values, meter readings and status messages. Limit the set to what is minimally required (read more here).

- Level 5 – Object models: Where available, adopt standard information models (e.g., OPC UA Companion Specifications such as the Weihenstephan standard) and extend them only where business needs require. Please find our step-by-step guide here.
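
These recommendations can be turned into an automated check, for example in a gateway or in tests for new publishers. The sketch below encodes one possible rule set for levels 2 to 4; the topic pattern, required fields, and allowed value types are assumptions for illustration, not a standard, and object models (level 5) are out of scope.

```python
import json
import re
from datetime import datetime

# Hypothetical rule set for illustration only.
# Level 2: exactly five lowercase levels, location/area/line/machine/signal.
TOPIC_RULE = re.compile(r"[a-z0-9-]+(?:/[a-z0-9-]+){4}")
# Level 3: payload must be a UTF-8 JSON object containing these fields.
REQUIRED_FIELDS = {"value", "unit", "timestamp"}
# Level 4: numbers for measurements and meter readings, booleans for status.
ALLOWED_VALUE_TYPES = (bool, int, float)


def check_message(topic: str, payload: bytes) -> list[str]:
    """Return the rule violations of one UNS message (empty list = compliant)."""
    violations: list[str] = []
    if not TOPIC_RULE.fullmatch(topic):
        violations.append("Level 2: topic is not location/area/line/machine/signal")
    try:
        data = json.loads(payload)
    except ValueError:  # JSONDecodeError and UnicodeDecodeError both subclass ValueError
        return violations + ["Level 3: payload is not UTF-8 JSON"]
    if not isinstance(data, dict):
        return violations + ["Level 3: payload is not a JSON object"]
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        violations.append(f"Level 3: missing fields {sorted(missing)}")
    if "value" in data and not isinstance(data["value"], ALLOWED_VALUE_TYPES):
        violations.append("Level 4: value must be a number or boolean")
    if "unit" in data and not isinstance(data["unit"], str):
        violations.append("Level 4: unit must be a string")
    if "timestamp" in data:
        try:
            datetime.fromisoformat(data["timestamp"])
        except (TypeError, ValueError):
            violations.append("Level 4: timestamp is not an ISO 8601 string")
    return violations


ok = check_message(
    "plant-a/assembly/line1/machine3/temp",
    b'{"value": 25.3, "unit": "degC", "timestamp": "2024-05-01T12:00:00+00:00"}',
)
bad = check_message("factory/line1/machine3/temp", b"25.3")
print(ok)   # []
print(bad)  # ['Level 2: topic is not location/area/line/machine/signal', 'Level 3: payload is not a JSON object']
```

Note that `datetime.fromisoformat` accepts only a subset of ISO 8601 in older Python versions (for example, a trailing "Z" is rejected before Python 3.11), so a production rule set would need to pin down the exact timestamp format as well.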

In addition to technical specifications, organizational rules are crucial: Define who is authorized to introduce new topics or data points, how change management is handled, and which security practices apply. These should include authentication and authorization at the broker, IT/OT network segmentation, and continuous monitoring of data traffic.

Conclusion

IT/OT interoperability in the Unified Namespace is a key concept for future-proof industrial architectures. With a UNS, the previously rigid layers of automation can be transformed into a flexible, event-driven namespace. This namespace connects all participants, from sensor control to cloud analytics, in real time. Yet success requires more than connectivity alone. Only when common standards are enforced across all levels (from transport and protocols to semantics and object models) can a UNS realize its full potential. Companies should therefore define these standards early and, wherever possible, build on open, established standards to prevent isolated solutions and vendor lock-in.

For IT/OT architects, this means mastering both the technical foundations (networks, protocols, encodings) and information modeling. When done effectively, the result is a truly end-to-end data infrastructure where new machines, applications, or analytics systems can be integrated on a plug-and-play basis. The upfront effort in standardization pays off over time through faster integration projects, reduced engineering overhead, and consistently higher data quality — laying the groundwork for the smart factory.