AI models and AI agents are increasingly entering industrial automation. They analyze production data, detect anomalies, and over time are expected to initiate control actions. But there is a fundamental architectural gap between a large language model (LLM) and a running production line: How does an AI system gain structured, secure, and contextualized access to real-time data in the Unified Namespace (UNS)?

Until now, companies have solved this with custom integrations such as direct UNS subscriptions, custom REST wrappers, or proprietary connectors. The result is a growing number of fragile, hard-to-maintain point-to-point integrations.

The Model Context Protocol (MCP) provides a standardized solution. This article explains how MCP acts as the architectural integration layer for AI in the Unified Namespace and what design decisions IT/OT architects must make.

What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open standard that defines how AI models communicate with external tools and data sources. It was introduced by Anthropic in 2024 and is now supported by a growing ecosystem. The core idea is simple: instead of wiring each data source individually to an AI model, MCP provides a standardized interface. It can be compared to USB-C for AI systems.

The Three Core Building Blocks of MCP

MCP structures communication around three concepts.

- Tools are executable functions that an AI model can invoke. For example, a tool called get_machine_status retrieves the current machine state from the UNS. Tools support both read and write operations.

- Resources are contextual data provided to the AI model. In a UNS architecture, resources may represent topic hierarchies, signal metadata, units, data types, and update rates.

- Prompts are reusable query templates that standardize recurring tasks. For example, a shift report prompt can automatically aggregate relevant KPIs from the UNS.
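As a rough illustration, the three building blocks can be pictured as plain descriptors that an MCP server advertises. The field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`, `arguments`) follow the MCP specification; the `uns://` URI scheme, the `shift_report` prompt name, and the parameter names are assumptions made for this sketch.

```python
# Illustrative descriptors only; the uns:// scheme and parameter names are assumptions.

# A tool: an executable function whose input is described by a JSON Schema.
machine_status_tool = {
    "name": "get_machine_status",
    "description": "Return the current state of one machine from the UNS.",
    "inputSchema": {
        "type": "object",
        "properties": {"machine_id": {"type": "string"}},
        "required": ["machine_id"],
    },
}

# A resource: read-only context that is addressed by a URI.
topic_hierarchy_resource = {
    "uri": "uns://site01/topic-hierarchy",
    "name": "UNS topic hierarchy",
    "mimeType": "application/json",
}

# A prompt: a reusable template with named arguments.
shift_report_prompt = {
    "name": "shift_report",
    "description": "Summarize the relevant KPIs of one shift.",
    "arguments": [
        {"name": "shift", "description": "Shift identifier", "required": True},
    ],
}
```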

How MCP Differs from Traditional APIs

MCP is not a replacement for REST or GraphQL. It operates at a different abstraction layer. While REST exposes fixed endpoints that a developer wires up in advance, an MCP server describes its capabilities dynamically, so AI models can discover and use the available tools at runtime. If the underlying data structure changes, the server can absorb that change behind unchanged tool definitions, and the interface the AI model sees remains stable. This is a fundamental shift for AI integration.
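Runtime discovery can be sketched with a minimal in-memory dispatcher. The method names `tools/list` and `tools/call` and the JSON-RPC 2.0 envelope follow the MCP specification; the registry, the handler, and the returned machine state are hypothetical, and transport, initialization, and authentication are left out.

```python
import json

# Hypothetical in-memory registry; a real server would query the UNS in the handler.
TOOLS = {
    "get_machine_status": {
        "description": "Return the current state of one machine.",
        "inputSchema": {
            "type": "object",
            "properties": {"machine_id": {"type": "string"}},
            "required": ["machine_id"],
        },
        "handler": lambda args: {"machine_id": args["machine_id"], "state": "RUNNING"},
    },
}


def _error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tools/list or tools/call."""
    method = request["method"]
    if method == "tools/list":
        # Discovery: describe every tool without exposing its implementation.
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        tool = TOOLS.get(params["name"])
        if tool is None:
            return _error(request["id"], -32602, "Unknown tool")
        payload = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(payload)}], "isError": False}
    else:
        return _error(request["id"], -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle_request({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_machine_status", "arguments": {"machine_id": "machine04"}},
})
```

The client never hard-codes an endpoint per tool: it first asks what exists, then calls a tool by name with arguments that match the advertised schema.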

Why MCP in the Unified Namespace (UNS)?

The Unified Namespace solves the data availability problem. All relevant OT and IT data flows into a centralized, broker-based architecture, creating a single source of truth. This works well for dashboards, MES, and ERP systems. However, AI systems have different requirements.

Three Architectural Challenges for AI in the UNS

- The first challenge is contextualization. A UNS topic such as site01/area02/line03/machine04/temperature contains a numeric value. But AI models require context: unit, valid operating range, signal semantics, and asset identity. This metadata often exists but is distributed across topics. AI must know where and how to retrieve it.

- The second challenge is access control. MQTT ACLs operate at topic level. For AI use cases, this is often insufficient. An agent might be allowed to read temperature values but not modify recipe parameters, even if both exist in the same topic branch. AI requires role-based, operation-level control.

- The third challenge is action capability. Read-only AI has limited value. As soon as AI must trigger notifications, adjust setpoints, or create maintenance tickets, it needs validated and controlled interfaces. Direct UNS publishes without validation introduce serious security risks.

Without a Standard: Spaghetti Architecture

Without MCP, each agent builds its own integration logic. One agent uses a Python MQTT client. Another consumes a REST wrapper. A third reads directly from a database. Each new AI system increases complexity. The result is point-to-point architecture, the exact problem the UNS already solved at the data layer.

MCP extends the UNS principle to the AI layer by providing standardized and centralized access instead of custom integrations.

Reference Architecture: MCP Server in the UNS

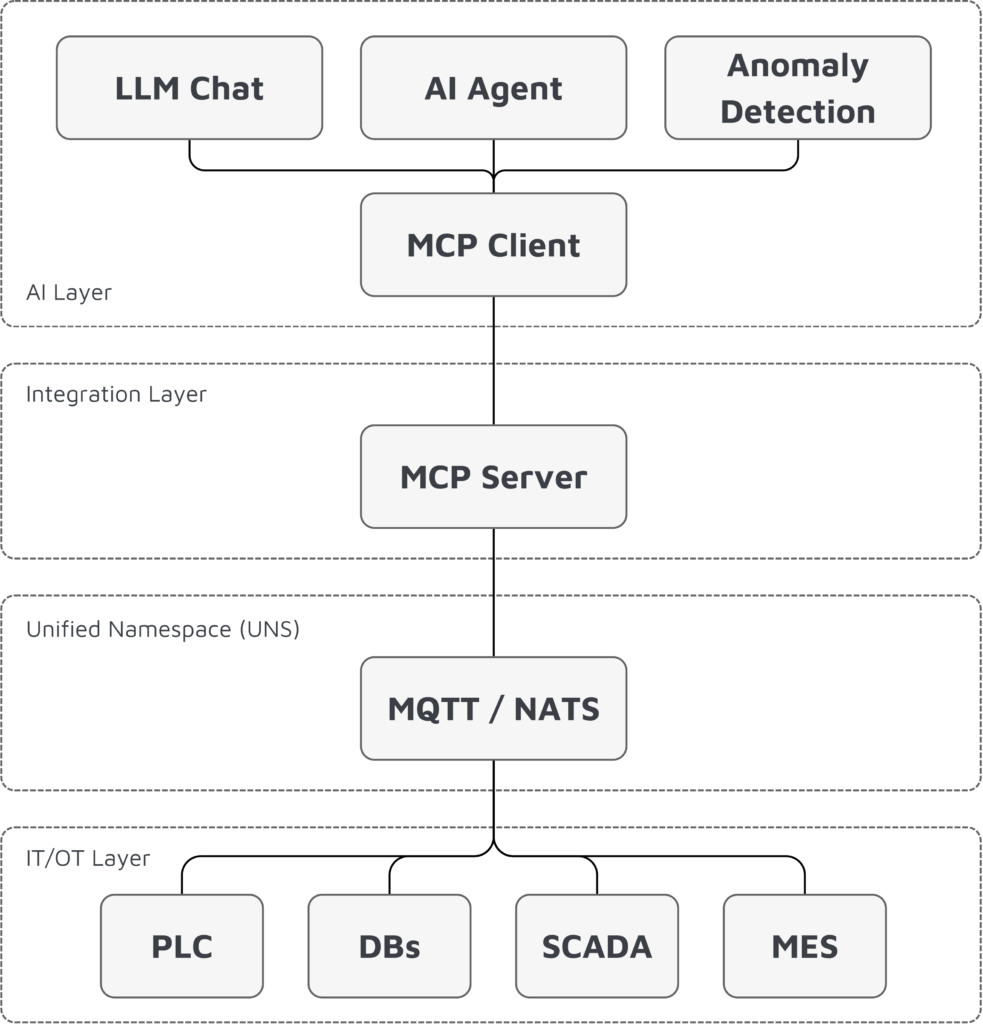

The following architecture shows how the MCP server acts as a controlled intermediary between AI agents and the UNS.

Architecture Overview

Components in Detail

MCP server (UNS gateway): The MCP server is the central component. It connects to the message broker, understands the UNS topic hierarchy, exposes MCP tools and resources, translates AI tool calls into UNS subscriptions or publishes, and validates write operations.

MCP client: The MCP client runs within the AI runtime or agent framework. It discovers available tools and resources and exposes them to the AI model while handling communication with the MCP server.

Tools: Controlled Access to the UNS

The MCP server defines tools as controlled access points to the UNS:

| Tool | Description | Access |
|---|---|---|
| get_machine_status | Current status of a machine | Read |
| query_production_kpi | Key production figures over time | Read |
| list_active_alarms | Open alarms of certain assets | Read |
| set_target_value | Set setpoint of a controlled variable | Write |
| create_maintenance_ticket | Create maintenance order | Write |

Each tool has a strict input and output schema. The MCP server validates parameters before executing UNS publishes. The AI cannot send arbitrary payloads to the UNS.
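A minimal sketch of such a validation step for `set_target_value` might look as follows. It uses only the standard library instead of a JSON Schema validator; the argument names (`machine_id`, `signal_name`, `value`) and the limits table are assumptions for illustration, not part of any published tool definition.

```python
from dataclasses import dataclass

# Hypothetical per-signal limits; in practice these could come from the data dictionary.
SETPOINT_LIMITS = {
    ("oven01", "temperature"): (60.0, 95.0),
}


@dataclass(frozen=True)
class SetTargetValue:
    machine_id: str
    signal_name: str
    value: float


def validate_set_target_value(arguments: dict) -> SetTargetValue:
    """Reject anything that does not match the tool's input schema."""
    expected = {"machine_id": str, "signal_name": str, "value": (int, float)}
    # No missing and no additional fields: arbitrary payloads are refused.
    if set(arguments) != set(expected):
        raise ValueError(f"expected exactly the fields {sorted(expected)}")
    for name, field_type in expected.items():
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(arguments[name], bool) or not isinstance(arguments[name], field_type):
            raise ValueError(f"field {name!r} has the wrong type")
    key = (arguments["machine_id"], arguments["signal_name"])
    if key not in SETPOINT_LIMITS:
        raise ValueError(f"no writable setpoint registered for {key}")
    low, high = SETPOINT_LIMITS[key]
    if not low <= arguments["value"] <= high:
        raise ValueError(f"value {arguments['value']} is outside [{low}, {high}]")
    return SetTargetValue(key[0], key[1], float(arguments["value"]))
```

Only a `SetTargetValue` instance that passed every check would be handed to the code that publishes to the UNS.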

Resources: Providing Context to the AI Model

Resources supply structured context for the AI model:

- Topic hierarchy: The complete structure of the UNS, including ISA-95 levels (enterprise / site / area / line / cell)

- Data dictionary: Description of the available signals with units, value ranges and update frequencies

- Operational context: Shift schedules, recipes, and production orders, that is, information an AI model needs to interpret measured values correctly

Positioning Within ISA-95

The MCP server operates between Level 3 (MES/MOM) and Level 4 (ERP/Business). It consumes data from all UNS levels but exposes it in a filtered and contextualized way. It preserves the UNS principle that each layer consumes what it needs without tight coupling.

MCP vs. Direct UNS Integration

Not every application requires MCP. The following table will help you decide.

| Criterion | Direct UNS | MCP server |
|---|---|---|
| Standardization | Proprietary per agent | Open standard |
| Contextualization | Manual, per agent | Central, in the MCP server |
| Access control | MQTT ACLs (topic level) | Tool level + validation |
| Write access | Uncontrolled | Validated via tool schema |
| Model interchangeability | Re-implement agent | MCP interface remains stable |
| Initial complexity | Low | Medium |
| Scaling beyond 3 agents | Effort multiplies with each agent-to-source integration | Effort grows linearly |

When Is Direct UNS Sufficient?

For simple, single-agent, read-only use cases with limited scope and no governance requirements, direct UNS integration may be sufficient. One example is a basic dashboard bot that summarizes production figures.

When Does MCP Become Essential?

MCP becomes relevant as soon as:

- Multiple AI agents access the UNS (reuse of tool definitions)

- Write accesses are necessary (validation and authorization)

- Models need to be interchangeable (switching from GPT to Claude to an open-source model without reworking the integration)

- Compliance requirements exist (auditing of all AI access via a central layer)

At that point, MCP becomes infrastructure rather than an optional enhancement.

Current Limitations of MCP

While the Model Context Protocol (MCP) shows significant promise, IT/OT architects and enterprise security teams must consider several critical limitations:

1. No Standardized Role and Policy Model

MCP currently lacks a mature, standardized RBAC/ABAC model at the protocol level. Authorization is typically implemented within individual MCP servers. For enterprise environments with Zero-Trust architectures, centralized IAM, and fine-grained policies, this creates significant integration overhead. Enterprise integration challenges:

- Additional integration effort in Zero-Trust architectures

- Difficult integration with centralized Identity & Access Management (IAM)

- Missing support for fine-grained policies in enterprise contexts

2. Insufficient Auditability and Traceability of AI Decisions

MCP enables tool-level logging but does not define a standardized structure for tamper-proof audit trails or AI decision provenance.

Critical for regulated industries: Sectors such as pharmaceutical, automotive, or food & beverage mandate complete traceability and change histories. MCP currently offers no out-of-the-box solution for compliance-ready documentation.

3. Credential Management & Secrets Exposure

MCP servers require privileged credentials (API keys, database credentials, OAuth tokens) to integrate with backend systems. However, MCP does not currently define a standardized secrets management strategy. Concrete security risks:

- Credentials are often stored insecurely in config files or environment variables

- Risk of exposure through logs, error messages, or compromised MCP servers

- Successful attack enables lateral movement into connected systems

4. Maturity Level and Classification for IT/OT Architects

MCP is an emerging standard. Industrial-grade reference implementations, certified components, and long-term supported enterprise distributions are still under development. This requires careful risk assessment for production-critical OT environments. MCP should currently be viewed as a governance and integration layer for AI use cases, not as a security-certified OT component. Organizations deploying MCP in production must rigorously implement security-by-design, network segmentation, human-in-the-loop mechanisms, and centralized identity integration.

Best Practices for MCP in the UNS

The following best practices show how to implement an MCP server in the Unified Namespace in a secure and scalable way.

1. Human in the Loop (HITL): Controlled Write Operations

Write tools should not execute autonomously in production-critical environments. Instead of executing a write directly, the flow should be:

- The agent generates a recommendation for action

- The MCP server marks the operation as “pending approval”

- A human (e.g. a shift supervisor) approves it via a UI or workflow system

- Only then does the MCP server execute the write call to the UNS
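The approval flow above can be sketched as a small queue that holds write requests until a human decides. The class names, the ticket format, and the `execute` callback are assumptions for this sketch; a real deployment would persist pending requests and authenticate the approver.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class PendingWrite:
    tool: str
    arguments: dict
    requested_by: str
    status: str = "pending_approval"
    decided_by: Optional[str] = None


class ApprovalQueue:
    """Holds write requests until a human approves or rejects them."""

    def __init__(self, execute):
        self._execute = execute  # callable that performs the actual UNS publish
        self._pending = {}

    def request(self, tool, arguments, agent_id):
        """Called on behalf of the agent: nothing is written yet."""
        ticket = str(uuid.uuid4())
        self._pending[ticket] = PendingWrite(tool, arguments, agent_id)
        return ticket

    def approve(self, ticket, approver):
        """Called from the UI or workflow system; only now is the write executed."""
        write = self._pending.pop(ticket)  # KeyError if unknown or already decided
        write.status, write.decided_by = "approved", approver
        self._execute(write)
        return write

    def reject(self, ticket, approver):
        write = self._pending.pop(ticket)
        write.status, write.decided_by = "rejected", approver
        return write


executed = []
queue = ApprovalQueue(execute=executed.append)
ticket = queue.request(
    "set_target_value",
    {"machine_id": "oven01", "signal_name": "temperature", "value": 82.0},
    agent_id="optimizer-1",
)
```

At this point `executed` is still empty; the write only happens once `queue.approve(ticket, approver=...)` is called.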

HITL Recommendations:

| Scenario | HITL recommendation | Rationale |
|---|---|---|
| Pure monitoring | Not required | AI only reads data |
| KPI analysis | Not required | No process change |
| Optimization suggestions (without execution) | Recommended | The decision remains with a human |
| Production-relevant parameter changes | Required | Affects quality, OEE, and reject rates |
| Safety-critical processes | Mandatory | Personnel or plant safety affected |
| Recipe or batch changes | Mandatory | Relevant for compliance and traceability |

2. Security: Defense in Depth

Treat the MCP server as a security-critical component. Clearly separate read-only tools from write tools and assign authorizations per agent role. A monitoring agent only has read access. An optimization agent is only granted write access to defined target values – never to the entire topic hierarchy.
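One way to picture this separation is a role-to-tool mapping that is checked before any tool runs. The role names, the mapping, and the writable targets below are assumptions for illustration; MCP itself does not prescribe such a model.

```python
# Hypothetical role-to-tool mapping; role names are assumptions for this sketch.
ROLE_TOOLS = {
    "monitoring": {"get_machine_status", "query_production_kpi", "list_active_alarms"},
    "optimization": {"get_machine_status", "query_production_kpi", "set_target_value"},
}

# Setpoints a role may write, as (machine_id, signal_name) pairs.
WRITABLE_TARGETS = {
    "optimization": {("oven01", "temperature")},
}


def is_allowed(role, tool, arguments):
    """Return True only if the role may call the tool with these arguments."""
    if tool not in ROLE_TOOLS.get(role, set()):
        return False
    if tool == "set_target_value":
        # Write access is limited to explicitly listed targets, never a whole branch.
        target = (arguments.get("machine_id"), arguments.get("signal_name"))
        return target in WRITABLE_TARGETS.get(role, set())
    return True
```

Unknown roles and unlisted targets are denied by default, which keeps the check fail-closed.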

Additionally, implement rate limiting at the tool level. An AI agent that makes 1,000 get_machine_status calls per second in a loop indicates a problem, not a legitimate use case.
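A sliding-window limiter per agent and tool is one simple way to implement this. The class name, the limits, and the injectable clock are assumptions for this sketch; a production server would likely also raise an alert when an agent hits the limit.

```python
import time
from collections import defaultdict, deque


class ToolRateLimiter:
    """Allows at most max_calls per (agent, tool) within a sliding time window."""

    def __init__(self, max_calls, window_seconds, clock=time.monotonic):
        self.max_calls = max_calls
        self.window = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._calls = defaultdict(deque)

    def allow(self, agent_id, tool):
        now = self.clock()
        calls = self._calls[(agent_id, tool)]
        # Drop timestamps that have fallen out of the window.
        while calls and now - calls[0] >= self.window:
            calls.popleft()
        if len(calls) >= self.max_calls:
            return False
        calls.append(now)
        return True
```

The server would call `allow(agent_id, tool_name)` before dispatching a tool call and return an error result when it yields `False`.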

3. Granularity: Not Too Fine, Not Too Generic

Avoid a 1:1 mapping between UNS topics and MCP tools. A tool get_temperature_machine_04 is too specific. Better: get_machine_signal(machine_id, signal_name) with parameters. At the same time, a single tool query_uns(topic) is too generic – it bypasses the validation and contextualization layer.

Align tool design with daily operations: What would a shift supervisor ask for? A machine status report, production key figures, or a list of open alarms. Requests like these mark a useful level of tool granularity.
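The parameterized middle ground, `get_machine_signal(machine_id, signal_name)`, can be sketched as follows. The asset registry, the list of allowed signals, and the `read_latest` callback are hypothetical; the point is that the agent passes identifiers and never a raw topic string.

```python
# Hypothetical asset registry mapping machine IDs to their UNS topic prefix.
ASSET_TOPICS = {
    "machine04": "site01/area02/line03/machine04",
    "oven01": "site01/area02/line03/oven01",
}
ALLOWED_SIGNALS = {"temperature", "status", "speed"}


def resolve_signal_topic(machine_id, signal_name):
    """Map validated identifiers to a topic; raw topics and wildcards are rejected."""
    if machine_id not in ASSET_TOPICS:
        raise ValueError(f"unknown machine_id: {machine_id!r}")
    if signal_name not in ALLOWED_SIGNALS:
        raise ValueError(f"unknown signal_name: {signal_name!r}")
    return f"{ASSET_TOPICS[machine_id]}/{signal_name}"


def get_machine_signal(machine_id, signal_name, read_latest):
    """read_latest is a hypothetical callable that returns the latest value for a topic."""
    topic = resolve_signal_topic(machine_id, signal_name)
    return {"topic": topic, "value": read_latest(topic)}
```

Because the topic is assembled from an allowlist, an MQTT wildcard such as `#` passed as `signal_name` is refused instead of being forwarded to the broker.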

4. Contextualization: Always Return Metadata

In addition to the raw value, each tool response should contain the unit, timestamp, normal range, and signal source. An AI model that receives only the value 87.3 can do little with it. A response such as {"value": 87.3, "unit": "°C", "range": [60, 95], "source": "site01/area02/line03/oven01/temperature", "timestamp": "2025-01-15T14:23:00Z"} provides the context needed for a well-founded analysis.
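A small helper can assemble such a response from a data dictionary. The `DATA_DICTIONARY` structure and the extra `in_range` flag are assumptions added for this sketch; the remaining fields mirror the example response above.

```python
from datetime import datetime, timezone

# Hypothetical data dictionary keyed by UNS topic.
DATA_DICTIONARY = {
    "site01/area02/line03/oven01/temperature": {"unit": "°C", "range": [60, 95]},
}


def build_response(topic, value, measured_at):
    """Wrap a raw value with the metadata an AI model needs to interpret it."""
    meta = DATA_DICTIONARY[topic]
    low, high = meta["range"]
    return {
        "value": value,
        "unit": meta["unit"],
        "range": meta["range"],
        "in_range": low <= value <= high,  # assumption: a convenience flag, not in the article
        "source": topic,
        "timestamp": measured_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
    }


response = build_response(
    "site01/area02/line03/oven01/temperature",
    87.3,
    datetime(2025, 1, 15, 14, 23, tzinfo=timezone.utc),
)
```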

5. Governance: MCP Server as the Only Gateway

AI agents must never communicate directly with the UNS broker. The MCP server is the single controlled entry point. This enables:

- Central logging of all AI accesses

- Uniform authorization

- Consistent validation

- Easier debugging in the event of agent misbehavior

6. Monitoring and Audit

Log every tool call including agent ID, timestamp, tool name, parameters, and result. These logs support debugging, compliance documentation, and optimization of tool definitions. Usage patterns reveal architectural improvement opportunities.
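A minimal sketch of such a log record is shown below. The fields mirror the list above; the hash chain that links each entry to its predecessor is an assumption added here to make later modification detectable, and it is not something MCP defines.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """In-memory audit log; each entry carries the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []
        self._last_hash = self.GENESIS

    @staticmethod
    def _digest(body):
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, agent_id, tool, parameters, result, now=None):
        entry = {
            "agent_id": agent_id,
            "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
            "tool": tool,
            "parameters": parameters,
            "result": result,
            "prev_hash": self._last_hash,
        }
        entry["hash"] = self._digest(entry)  # digest is computed before "hash" is added
        self._last_hash = entry["hash"]
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; any edited or removed entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(body):
                return False
            prev = entry["hash"]
        return True
```

Parameters and results must be JSON-serializable for this to work; in practice the entries would be written to append-only storage rather than kept in memory.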

Conclusion

MCP closes a critical architectural gap in the Unified Namespace by enabling standardized AI integration. Instead of individually wiring each AI system to the message broker, an MCP server provides controlled access, schema validation, centralized governance, and structured contextualization. For IT/OT architects, this results in three clear recommendations:

- Plan MCP as a standard component from the beginning.

- Design tools around operational functions rather than topic structures.

- Implement governance, logging, and access control in the first iteration.

The MCP ecosystem is evolving rapidly. Industrial-grade MCP servers tailored to UNS architectures are the logical next step. Architects who establish this foundation today will be prepared for scalable, compliant, production-ready AI integration tomorrow.