In today’s manufacturing industry, the seamless integration of IT and OT systems is a critical success factor. In this context, the Industrial Unified Namespace (UNS) architecture is playing an increasingly important role. At its core lies data modeling, which defines how data is structured and made accessible within the UNS. Effective data modeling is essential to ensure interoperability, scalability, and high data quality. This article provides a practical, step-by-step guide to developing and implementing data models in the UNS.

Why is data modeling essential?

Before the Internet of Things (IoT) era, operational technology (OT) primarily focused on real-time monitoring and control. Systems were built for robustness and efficiency, with little regard for advanced data analytics or complex IT/OT integration. Data was typically used for specific purposes and often organized in manufacturer-specific, proprietary data models. With the rise of Industry 4.0, this approach has fundamentally shifted. Companies now recognize the immense value of structured and standardized data, particularly within architectures like the Unified Namespace (UNS). Data modeling has become a cornerstone of this paradigm, offering significant advantages, such as:

- Interoperability: Modern manufacturing environments consist of a large number of IT/OT systems from different vendors. Data modeling establishes a common language and structure for seamless communication between these systems within the Unified Namespace (UNS). This ensures:

  - Seamless integration of different systems

  - Compatibility across the entire ecosystem

- Reduction of communication barriers: Standardized data models ensure uniformity and clarity. The advantages are:

  - Improved data quality through defined semantics and data formats

  - Consistency and clarity, which reduce errors and lead to more reliable decision-making

- Scalability and flexibility: Manufacturing systems are constantly evolving. Data modeling creates a scalable basis that:

  - Facilitates the integration of new technologies and systems

  - Offers flexibility for changing requirements

What is data modeling in the context of the Unified Namespace (UNS)?

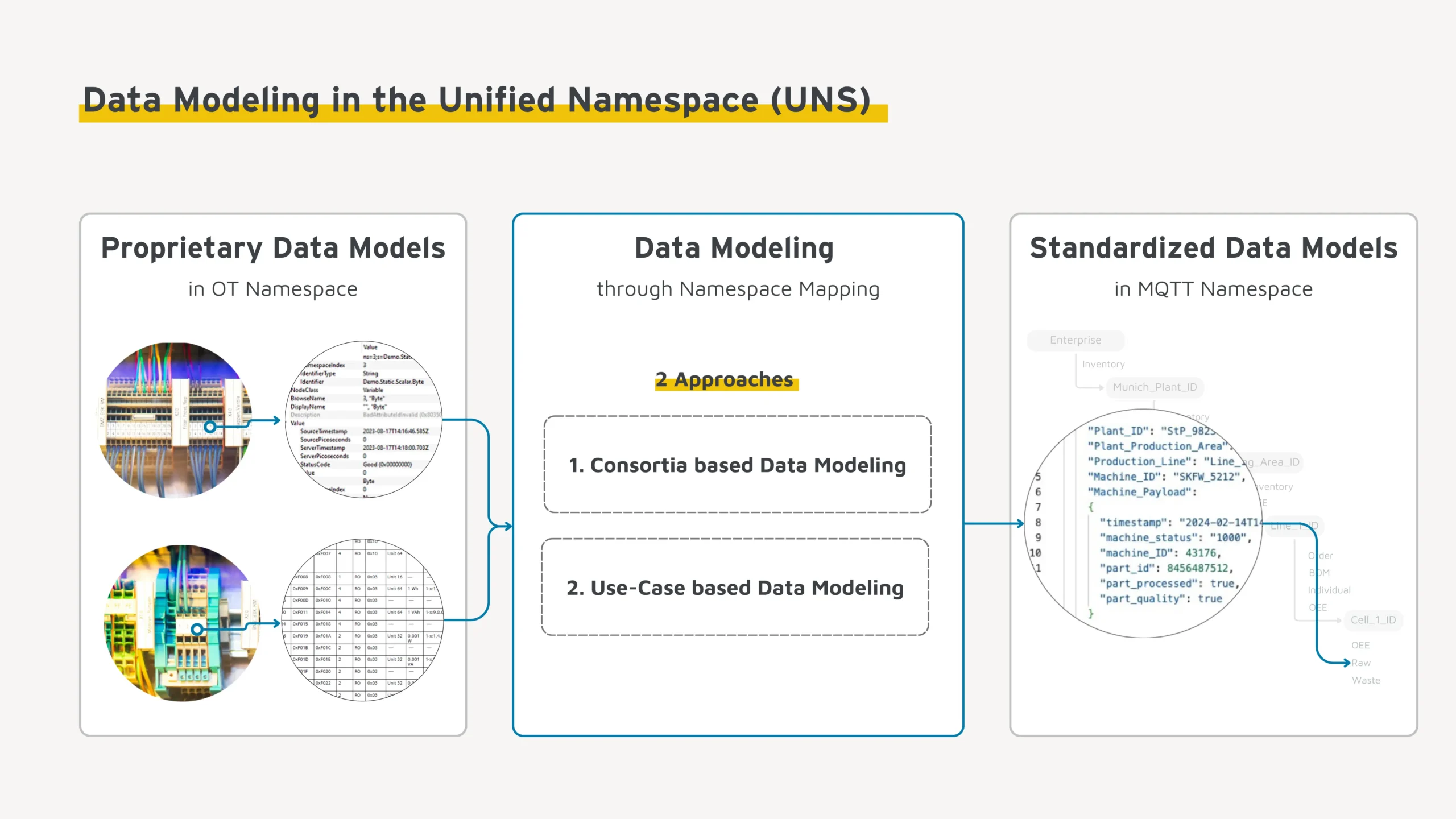

Data modeling is the process of representing data in a structured way in a specific context, e.g. the Industrial Unified Namespace (UNS). Its goal is to create a platform-independent model that clearly defines data, its attributes, and relationships. This approach fosters a unified understanding of data across the organization, bridging the gap between OT, IT, and business experts.

Data modeling builds trust in both the data and the applications that use it, but it requires an investment of time and resources. This investment pays off, as it provides stability, adaptability, and scalability for the Unified Namespace (UNS). In practice, two common approaches to data modeling are used, each with its own advantages and disadvantages.

1. Consortia-based data modeling

This approach relies on standardized data models collaboratively developed by industry consortia or organizations, such as OPC UA Companion Specifications.

- Pros

  - Compliance with industry best practices

  - Easier integration with external partners and systems thanks to uniform, industry-wide standards

  - Potentially faster implementation

- Cons

  - High complexity significantly hinders practical applicability and implementation

  - Limited usability, as the models are often designed for very general requirements

  - Adaptation to specific needs can be difficult

2. Use-case-based data modeling

In this approach, the data model is tailored to individual use-cases or company-specific requirements.

- Pros

  - High flexibility, as the model is under your own control

  - High effectiveness, as the model is precisely tailored to the specific requirements

  - Fast implementation, as only relevant data and relationships are taken into account

- Cons

  - Risk of limited company-wide standardization, as platform-dependent, individualized data models may emerge

  - Low compatibility with external, cross-company systems

  - Higher maintenance costs, as individual models have to be regularly adapted and maintained

Note: Due to the high complexity and limited adoption of consortia-based data models, the two approaches are often combined in practice. The result is use-case-based data models that incorporate the best practices and standards established by consortia. The following section outlines this approach in detail.

What is a data model?

A data model serves as the foundation of data modeling, defining the structure, organization, and relationships of data within the Unified Namespace (UNS). It determines how data is structured, presented, and interpreted by systems and applications. A data model typically defines key aspects such as:

- Entities

  - Describe the basic data objects that are represented in the Unified Namespace (UNS).

  - Examples: Machine, sensor, production order

- Attributes

  - Describe the properties of the entities.

  - Examples: static properties (e.g. manufacturer information), dynamic properties (e.g. timestamp, status or temperature).

- Relationships

  - Define the relationships and connections between entities and their attributes.

  - Example: A production order consists of several sub-orders (order net)

- Data types

  - Specify which type of data an attribute can contain.

  - Examples: Integer, string, boolean, timestamp.
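The four elements above can be made concrete in code. The following is a minimal sketch, assuming Python dataclasses as the notation: each class is an entity, each field an attribute with a data type, and list fields express relationships, including the order net of a production order. The class names, field names and identifiers such as "ExampleCorp" are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class Sensor:
    """Entity: a sensor attached to a machine."""
    sensor_id: str          # static attribute, data type string
    temperature_c: float    # dynamic attribute, data type float, unit °C
    timestamp: datetime     # dynamic attribute, data type timestamp


@dataclass
class Machine:
    """Entity: a machine with static and dynamic attributes."""
    machine_id: str         # static attribute
    manufacturer: str       # static attribute (manufacturer information)
    is_running: bool        # dynamic attribute, data type boolean
    # Relationship: a machine has zero or more sensors
    sensors: List[Sensor] = field(default_factory=list)


@dataclass
class ProductionOrder:
    """Entity: a production order that may consist of several sub-orders (order net)."""
    order_id: str
    quantity: int           # data type integer
    # Relationship: an order contains sub-orders of the same entity type
    sub_orders: List["ProductionOrder"] = field(default_factory=list)

    def all_order_ids(self) -> List[str]:
        # Depth-first walk over the order net, starting with this order
        ids = [self.order_id]
        for sub in self.sub_orders:
            ids.extend(sub.all_order_ids())
        return ids


# Usage with hypothetical identifiers
now = datetime.now(timezone.utc)
machine = Machine("M-001", "ExampleCorp", True, [Sensor("S-01", 42.5, now)])
order = ProductionOrder("PO-100", 50, [ProductionOrder("PO-100-1", 20), ProductionOrder("PO-100-2", 30)])
print(order.all_order_ids())  # ['PO-100', 'PO-100-1', 'PO-100-2']
```

The classes are technology-independent: the same structure could later be expressed as JSON, as an OPC UA information model or as a database schema.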

Step-by-step guide: Data modeling in the Unified Namespace (UNS)

Creating a data model may seem complex at first, but taking a step-by-step approach and focusing on specific use cases can make the process much more manageable.

Phase 1: Conceptual data modeling

In this phase, the relevant entities and attributes are identified at a conceptual, abstract level. These are then refined into a detailed, technology-independent model. The goal is to create a conceptual model that aligns with the requirements of your use-cases. To achieve this, follow these steps:

1. Prioritize use-cases

Prioritize the most relevant use cases for your Industrial Unified Namespace (UNS) by conducting a cost-benefit analysis. Consider the specific requirements of key stakeholders, including:

- OT experts (e.g. control engineers): Are familiar with factory systems (e.g. machines or central PLCs) and know how to access the systems.

- IT experts (e.g. IT manager of factory or corporate IT): Understand how to access corporate or cloud systems (e.g. Tableau or ERP) and know how to provide data for these systems.

Start with a straightforward use-case that is easy to implement and offers significant benefits. A common example is asset health monitoring, which helps track machine conditions and reduce downtime. Simple use-cases provide an excellent foundation for developing a data model while ensuring it meets practical, real-world requirements.

2. Identify relevant data categories

Identify the key data categories relevant to your use-case. Machine data can generally be grouped into three main categories, each suited to specific application scenarios:

- Telemetry data

  - Covers raw data transferred to higher levels (e.g. for cloud analysis).

  - Contains raw measurements (e.g. real-time temperature, high-frequency vibration measurements) together with important metadata such as the timestamp of the measurement.

  - Example use-case: Asset monitoring and analytics.

- Command and control data

  - This category was traditionally limited to the production level due to its time sensitivity. However, advances in hybrid edge-to-cloud architectures now make it possible to automate control actions at higher levels.

  - Messages typically contain production order details, recipes, and process parameters.

  - Example use-case: Automated closed-loop decision cycles for the continuous adaptation and optimization of production processes.

- Management data

  - Plays an important role in comprehensive machine management.

  - Contains dynamic and static metadata (e.g. machine ID, manufacturer, machine configuration, information on the last maintenance).

  - Example use-case: Maintenance management.

Each data category has specific requirements for communication and processing, such as criticality, frequency, or target systems. Representing these categories in separate data models can be highly beneficial. This approach allows for better differentiation between critical and non-critical communication, improved segmentation, and the ability to define appropriate access rights for each category.
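To illustrate how separate data models per category can carry their own communication and access rules, here is a minimal sketch. It assumes that each category gets its own branch in the topic tree, an MQTT QoS level, and lists of roles allowed to publish and subscribe. The role names and QoS values are hypothetical examples, not recommendations from this guide.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, FrozenSet


class DataCategory(Enum):
    TELEMETRY = "telemetry"
    COMMAND = "command"
    MANAGEMENT = "management"


@dataclass(frozen=True)
class CategoryPolicy:
    # Hypothetical per-category settings; values are illustrative only
    topic_segment: str          # separate branch in the topic tree per category
    mqtt_qos: int               # MQTT QoS level (0, 1 or 2)
    writers: FrozenSet[str]     # roles allowed to publish
    readers: FrozenSet[str]     # roles allowed to subscribe


POLICIES: Dict[DataCategory, CategoryPolicy] = {
    # High-frequency, non-critical raw data: occasional loss is tolerable
    DataCategory.TELEMETRY: CategoryPolicy(
        "telemetry", 0, frozenset({"edge"}), frozenset({"edge", "analytics", "mes"})),
    # Critical control messages: strictest delivery, few writers
    DataCategory.COMMAND: CategoryPolicy(
        "command", 2, frozenset({"mes"}), frozenset({"edge"})),
    # Metadata for machine management
    DataCategory.MANAGEMENT: CategoryPolicy(
        "management", 1, frozenset({"edge", "maintenance"}), frozenset({"maintenance", "analytics"})),
}


def may_publish(role: str, category: DataCategory) -> bool:
    # Only roles listed as writers may publish into a category
    return role in POLICIES[category].writers


def may_subscribe(role: str, category: DataCategory) -> bool:
    # Only roles listed as readers may subscribe to a category
    return role in POLICIES[category].readers


print(may_publish("mes", DataCategory.COMMAND))        # True
print(may_publish("analytics", DataCategory.COMMAND))  # False
```

In a real deployment, such rules would typically be enforced by the MQTT broker's access control configuration rather than by application code; the sketch only shows how the categories translate into distinct rules.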

3. Determine relevant IT/OT systems

Identify relevant data source and target systems for the use-case and clarify the following points:

- Which entities are relevant for the use-case (e.g. machines)?

- Which attributes are necessary to implement your use-case? E.g. operating mode of the machine (Automatic, Idle, Stopped, Error).

- What data is available in the source system and how can it be retrieved (e.g. via XML files or OPC UA)?

- How are the target systems accessed and how is the data received? E.g. via a REST API or via database queries.

- Is there a special data format or data type that your target system expects (e.g. JSON or a binary buffer)?

- How often does the data need to be updated and what triggers the update (e.g. event-based or cyclical)?

Focus initially on the specific requirements of the prioritized use-case. Over time, the data model can be adapted and gradually expanded to accommodate additional needs.
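The last question, event-based versus cyclical updates, directly affects how often data is published. The following minimal sketch shows both triggers for a single numeric value. The class name, the deadband parameter (the minimum change that counts as an event) and the interval are hypothetical choices for illustration; time is passed in explicitly to keep the example deterministic.

```python
from typing import Optional


class PublishTrigger:
    """Decides whether a new sample should be published: on change (event) or on a fixed cycle."""

    def __init__(self, mode: str, deadband: float = 0.0, interval_s: float = 1.0):
        if mode not in ("event", "cyclic"):
            raise ValueError("mode must be 'event' or 'cyclic'")
        self.mode = mode
        self.deadband = deadband        # minimum change that counts as an event
        self.interval_s = interval_s    # cycle time for cyclic publishing
        self._last_value: Optional[float] = None
        self._last_time: Optional[float] = None

    def should_publish(self, value: float, now_s: float) -> bool:
        if self._last_value is None:
            publish = True  # always publish the first sample
        elif self.mode == "event":
            # Event-based: publish only when the value changed by more than the deadband
            publish = abs(value - self._last_value) > self.deadband
        else:
            # Cyclic: publish once the interval since the last publication has elapsed
            publish = now_s - self._last_time >= self.interval_s
        if publish:
            self._last_value = value
            self._last_time = now_s
        return publish


event = PublishTrigger("event", deadband=0.5)
print([event.should_publish(v, t) for t, v in enumerate([20.0, 20.2, 20.8, 20.9])])
# [True, False, True, False]

cyclic = PublishTrigger("cyclic", interval_s=2.0)
print([cyclic.should_publish(20.0, t) for t in [0.0, 1.0, 2.0, 3.0, 4.5]])
# [True, False, True, False, True]
```

Event-based publishing keeps traffic low for slowly changing values such as the operating mode, while cyclic publishing suits consumers that expect regular samples. Both can also be combined, e.g. publishing on change plus a periodic heartbeat.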

4. Define the data model

Define the data model for the identified entities. Where applicable, use established industry standards, such as OPC UA Companion Specifications, as a reference. Be sure to define the following elements:

- Attribute name

  - Define the name of each attribute and use it consistently across all data models.

  - Example: An attribute such as CncOperationMode should be named identically in all data models.

- Description

  - Add context that makes the attribute interpretable.

  - Example for the variable CncOperationMode: 0 = Automatic; 1 = Idle; 2 = Stopped; 3 = Error.

- Unit of the variable

  - Keep the units of attributes consistent across all data models.

  - Example: Temperature always in °C.

- Default values

  - Default values are relevant if the target system has to start with predefined states during initialization, or if fallback values are needed when certain data is missing.

  - Example: 1 (= Idle) as the default value for CncOperationMode.

- Data type

  - Make sure that data types are consistent across all data models.

  - Example: Integer for CncOperationMode.
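The five elements can be captured in a single attribute definition. The following minimal sketch, written in Python as an assumed notation, encodes the CncOperationMode example: name, description, unit, default value 1 (Idle) and data type integer. The `AttributeDefinition` class, its `resolve` method and the `allowed` field are hypothetical helpers: missing values fall back to the default, while values of the wrong type or outside the documented range are rejected.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Any, FrozenSet, Optional


class CncOperationMode(IntEnum):
    # Value mapping taken from the attribute description
    AUTOMATIC = 0
    IDLE = 1
    STOPPED = 2
    ERROR = 3


@dataclass(frozen=True)
class AttributeDefinition:
    name: str                   # identical in all data models
    description: str            # context needed to interpret the value
    unit: Optional[str]         # e.g. "°C"; None for dimensionless values
    default: Any                # fallback when the value is missing
    data_type: type             # expected data type
    allowed: Optional[FrozenSet[Any]] = None  # optional set of valid values

    def resolve(self, raw: Any) -> Any:
        if raw is None:
            return self.default  # fallback value for missing data
        # bool is a subclass of int in Python, so reject it explicitly for integer attributes
        if isinstance(raw, bool) and self.data_type is not bool:
            raise TypeError(f"{self.name}: expected {self.data_type.__name__}, got bool")
        if not isinstance(raw, self.data_type):
            raise TypeError(f"{self.name}: expected {self.data_type.__name__}, got {type(raw).__name__}")
        if self.allowed is not None and raw not in self.allowed:
            raise ValueError(f"{self.name}: {raw!r} is not an allowed value")
        return raw


CNC_OPERATION_MODE = AttributeDefinition(
    name="CncOperationMode",
    description="0: Automatic; 1: Idle; 2: Stopped; 3: Error",
    unit=None,
    default=int(CncOperationMode.IDLE),
    data_type=int,
    allowed=frozenset(int(m) for m in CncOperationMode),
)

print(CNC_OPERATION_MODE.resolve(None))                          # 1
print(CncOperationMode(CNC_OPERATION_MODE.resolve(2)).name)      # STOPPED
```

Keeping such definitions in one shared place makes it easier to use the same name, unit and data type in every data model that contains the attribute.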

Phase 2: Physical data modeling

During the physical data modeling phase, data from real-world objects (e.g. machines) is mapped to the attributes of your conceptual model. This process results in standardized physical models (digital assets) that can be published within the Unified Namespace (UNS). For detailed guidance, please refer to our technology-specific step-by-step instructions. The phase comprises the following steps:

1. Publication in the Unified Namespace (UNS)

Define where the data should be published within the MQTT topic hierarchy. Follow best practices and guidelines for the design of the topic hierarchy.
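As an illustration of mapping and publication, the following minimal sketch builds a topic and a JSON payload for one digital asset. It assumes an ISA-95-style hierarchy (enterprise/site/area/line/cell), which is a common UNS convention but not prescribed here, and a hypothetical mapping from source tag names to model attributes. The names "acme", "OpMode", "SpindleTemp" and "SpindleTemperature" are invented for the example; the resulting topic and payload would then be handed to an MQTT client.

```python
import json
from datetime import datetime, timezone
from typing import Dict

# Hypothetical ISA-95-style levels; adapt to your own topic guidelines
LEVELS = ("enterprise", "site", "area", "line", "cell")

# Hypothetical mapping from source tags (e.g. PLC or OPC UA items) to model attributes
TAG_MAPPING = {"OpMode": "CncOperationMode", "SpindleTemp": "SpindleTemperature"}  # °C


def build_topic(location: Dict[str, str], category: str) -> str:
    segments = [location[level] for level in LEVELS] + [category]
    for segment in segments:
        # '/' separates topic levels and '+'/'#' are MQTT wildcards; empty levels are
        # legal in MQTT but rejected here as a naming guideline
        if not segment or any(ch in segment for ch in "/+#"):
            raise ValueError(f"invalid topic segment: {segment!r}")
    return "/".join(segments)


def map_to_model(raw: Dict[str, object], timestamp: datetime) -> str:
    # Keep only mapped tags, rename them to model attributes and add the timestamp
    payload = {TAG_MAPPING[tag]: value for tag, value in raw.items() if tag in TAG_MAPPING}
    payload["Timestamp"] = timestamp.isoformat()
    return json.dumps(payload, sort_keys=True)


location = {"enterprise": "acme", "site": "berlin", "area": "machining", "line": "line1", "cell": "cnc01"}
topic = build_topic(location, "telemetry")
payload = map_to_model({"OpMode": 0, "SpindleTemp": 41.7, "Debug": 1},
                       datetime(2024, 1, 1, tzinfo=timezone.utc))
print(topic)    # acme/berlin/machining/line1/cnc01/telemetry
print(payload)  # {"CncOperationMode": 0, "SpindleTemperature": 41.7, "Timestamp": "2024-01-01T00:00:00+00:00"}
```

Unmapped source tags such as "Debug" are dropped, so only attributes defined in the conceptual model reach the UNS.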

2. Validation of the data model

Validating the data model ensures it meets the requirements of the use-case. Conduct end-to-end testing to verify correct implementation. Connect the target system to the Unified Namespace (UNS) and confirm that the data model fulfills all its requirements. Once validation is successful, the data model and use-case can be deployed to production.
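One part of end-to-end testing can be automated: checking that messages arriving at the target side match the data model. The following minimal sketch assumes JSON payloads and a hypothetical schema that maps attribute names to Python types. It reports missing, unexpected and wrongly typed attributes; it does not replace testing with the real target system.

```python
import json
from typing import Any, Dict, List

# Hypothetical schema derived from the data model: attribute name -> expected type
EXPECTED: Dict[str, type] = {"CncOperationMode": int, "SpindleTemperature": float, "Timestamp": str}


def _matches(value: Any, expected_type: type) -> bool:
    if isinstance(value, bool):
        return expected_type is bool  # JSON true/false only match boolean attributes
    if expected_type is float:
        return isinstance(value, (int, float))  # JSON has one number type; accept 41 for 41.0
    return isinstance(value, expected_type)


def validate_payload(message: str, expected: Dict[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the payload matches the model."""
    try:
        payload = json.loads(message)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return ["payload is not a JSON object"]
    problems = []
    for name, expected_type in expected.items():
        if name not in payload:
            problems.append(f"missing attribute: {name}")
        elif not _matches(payload[name], expected_type):
            problems.append(f"wrong type for {name}: {type(payload[name]).__name__}")
    for name in payload:
        if name not in expected:
            problems.append(f"unexpected attribute: {name}")
    return problems


good = '{"CncOperationMode": 0, "SpindleTemperature": 41.7, "Timestamp": "2024-01-01T00:00:00+00:00"}'
print(validate_payload(good, EXPECTED))  # []
print(validate_payload('{"CncOperationMode": "0", "Temp": 41.7}', EXPECTED))
# ['wrong type for CncOperationMode: str', 'missing attribute: SpindleTemperature',
#  'missing attribute: Timestamp', 'unexpected attribute: Temp']
```

A check like this could run in a test subscriber on the relevant topics, so that deviations from the model surface before the use-case goes live.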

3. Iterative adjustment

Data modeling within the Unified Namespace (UNS) is an ongoing process, not a one-time task. Data models must be continuously adapted to meet evolving requirements. Iterative updates ensure the data model remains relevant and aligned with current needs. Implementing version control allows you to track changes and maintain a clear history of the data model’s evolution. To ensure long-term quality and consistency of data in the UNS, establish clear guidelines and responsibilities for creating and updating data models.
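Version control becomes more useful when each change to a data model also signals its impact on consumers. The following minimal sketch borrows the Semantic Versioning convention (MAJOR.MINOR.PATCH), which is an assumption and not part of this guide: removing or retyping an attribute is treated as breaking, adding attributes as a compatible extension, and anything else as a patch. A data model is simplified to a hypothetical mapping of attribute names to data types.

```python
from typing import Dict, Tuple

Version = Tuple[int, int, int]


def next_version(current: Version, old: Dict[str, str], new: Dict[str, str]) -> Version:
    """Suggest a semantic version for a changed data model (attribute name -> data type)."""
    major, minor, patch = current
    removed_or_changed = any(name not in new or new[name] != dtype for name, dtype in old.items())
    if removed_or_changed:
        return (major + 1, 0, 0)   # breaking: consumers relying on old attributes may fail
    if set(new) - set(old):
        return (major, minor + 1, 0)  # additive: new attributes, existing ones untouched
    return (major, minor, patch + 1)  # no structural change, e.g. corrected descriptions


v1 = {"CncOperationMode": "Integer"}
print(next_version((1, 0, 0), v1, {**v1, "SpindleTemperature": "Float"}))  # (1, 1, 0)
print(next_version((1, 1, 0), v1, {"CncOperationMode": "String"}))          # (2, 0, 0)
print(next_version((1, 0, 0), v1, v1))                                      # (1, 0, 1)
```

Publishing the version alongside the data model (for example as a metadata attribute) would let consumers detect breaking changes before they process new messages.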

Conclusion

The Industrial Unified Namespace (UNS) architecture is essential for the seamless integration of IT and OT systems in modern manufacturing. Data modeling serves as the foundation for achieving interoperability, scalability, and high data quality. A step-by-step approach, combined with a focus on specific use-cases, simplifies the implementation process and enables companies to enhance their data architecture sustainably over time.